What you need to know

- Meta announced Aria Gen 2 glasses on Thursday, a research-focused design for “machine perception, egocentric and contextual AI, and robotics.”

- These glasses have SLAM and eye-tracking cameras, force-canceling speakers, mics that distinguish between voices, continuous heart-rate monitoring, and GNSS location tracking.

- They weigh 75g — less than the 98g Orion but more than the 50g Ray-Ban Metas — and last 6–8 hours with no apparent wire.

- Academic and commercial research labs can start testing Aria Gen 2 in early 2026, but the glasses won’t ship to consumers.

As we wait impatiently for Meta to release its next generation of AR and smart glasses, the company is tantalizing us with its new Aria Gen 2 glasses. Very few people will ever get to try them, but they sound technically impressive and make us excited for the inevitable consumer version.

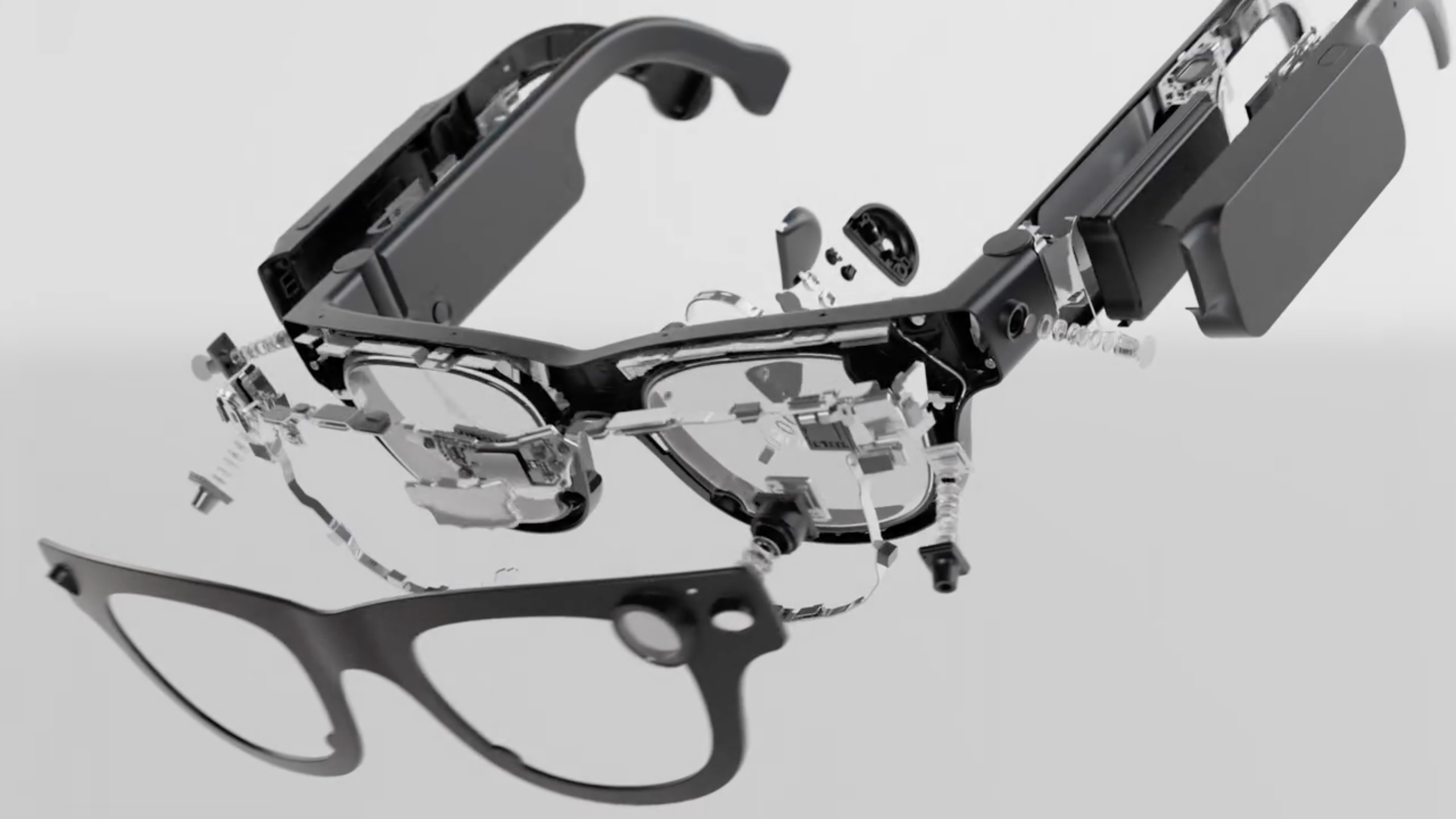

A follow-up to Meta’s original Aria glasses released in 2020, the Aria Gen 2 glasses have no AR holographic capabilities but are crammed full of RGB, 6DOF SLAM, and eye-tracking cameras, plus GPS tracking, to interpret the world around you along with your gaze and actions.

On the Meta blog, you can see a pair of videos of the Aria Gen 2 in action, including an impressive demo of a visually impaired woman using the familiar “Hey Meta” command to have Aria Gen 2 guide her with audio cues to the produce section of a grocery store to find apples. It looks like a next-gen version of the Be My Eyes accessibility tool for Ray-Ban Meta smart glasses.

“Project Aria from the outset was designed to begin a revolution around always-on, human-centric computing,” says Richard Newcombe, VP of Meta Reality Labs Research. The first-gen version was recently used to provide visual data for a robot in order to help it learn to perform human tasks, translating what we normally see and hear into data a robotic AI can use.

These smart glasses use custom Meta silicon to process SLAM, eye tracking, hand tracking, and speech recognition on-device. They last 6–8 hours, a 40% boost over the last gen, with no weight increase, and they look significantly skinnier than the Meta Orion AR glasses.

They’re still heavier than Ray-Ban Meta smart glasses, with noticeably thick arms, but they might blend in as somewhat “normal” glasses when the camera light isn’t on, and they seemingly don’t need any kind of wireless puck like Project Orion does.

“With Gen 2 glasses, we are building AI capabilities with a deeper understanding of the wearer’s context and environment,” says Mingfei Yan, Director of Project Management for AR research.

The Gen 2 version adds a PPG (photoplethysmography) sensor in the nosepad for continuous heart rate data, as well as a contact microphone “to distinguish the wearer’s voice from that of bystanders.”

Meta is largely offering Aria Gen 2 glasses to researchers and students, allowing them to take this raw stream of multisensor data and do whatever they might think of. Interested researchers can sign up with Meta to try out Aria Gen 2.

Recent leaks suggest that Meta is working on new Oakley smart glasses, a $1,000 Hypernova prototype with AR capabilities, and a follow-up to Orion codenamed Artemis. Meta won’t sell the Aria Gen 2, but some of its capabilities, sensors, and Meta AI upgrades could easily end up in new Meta consumer devices in the next year or two.

For now, smart glasses like Ray-Ban Meta and Xreal One are helping us cope with the wait, and we’ll have to see how much Aria Gen 2 DNA ends up on display at Meta Connect 2025.