What you need to know

- Google was spotted testing a new set of text-based options for images captured using Circle to Search.

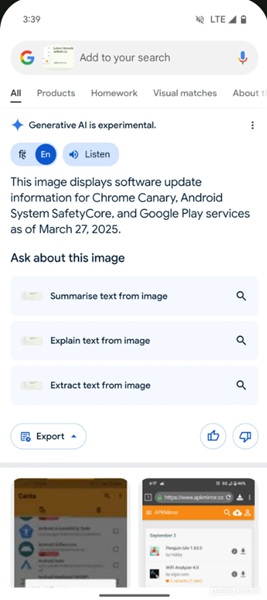

- These choices will appear in the provided AI Overview and will let users explain, summarize, or extract text found within the photo.

- Google highlighted AI Overviews for Circle to Search earlier in 2025 when the Galaxy S25 debuted.

Google might have a few upgrades for Circle to Search and how it delivers results for users.

In an APK dive, Android Authority reportedly discovered a trio of new options for Circle to Search. The three new “chips” supposedly apply to any AI Overview that appears when capturing “lengthy text” in Circle to Search. In the provided demo, the publication highlighted the three new options: Explain text from image, Summarize, and Extract.

For “explain,” the overview identifies the captured content as a news article and notes its title and author. The AI-generated blurb also links to the website the snapshot is from, but not to the article itself.

What’s more, the overview goes into some detail about the images, too, describing what is present as best it can.

The summarization feature for captured Circle to Search snapshots seemingly took in more than was actually captured. In the demo, the publication highlighted only one specific title; however, the summary briefly mentioned several other posts on the page. It’s likely this feature will function similarly to Gemini’s summarization in other places.

The “extraction” chip merges its base function with a smaller summary. In short, the AI Overview will attempt to extract several text-based elements from the Circle to Search photo in a semi-organized way. Since the publication took a photo from a website, the overview broke things down into “Top Section,” “News Section,” and “Bottom Section (Phone Screen)” categories.

The new chips were spotted in the Google app’s beta v16.11.36. The post speculates that these chips could also arrive when scanning items with Google Lens, but nothing’s confirmed as the company pushes through development.

AI Overviews for imagery captured with Circle to Search were announced earlier this year with the Galaxy S25 series. The AI-based blurbs explain what the image is with a few key bullet points and citations for fact-checking (per usual). Users also retain the ability to add a written query alongside the image after the overview appears.

Also, it seems what’s been discovered in the latest Google app beta is yet another expansion of the company’s “one-tap actions.” Originally, these quicker functions involved identifying phone numbers, email addresses, and URLs. Google said during January’s update that if its overview detects one of these elements, users will see a chip they can tap to quickly visit a website or contact its personnel.